Use case

The application gets a list of comma separated account codes which need to be looked up in an Account table using the IN clause in a dynamic query. The application can not use prepared statements or stored procedures. The objective is to minimize the risk of SQL injection. In this post we will discuss potential SQL injection using dynamic SQL and using an SQL server inbuilt function named QUOTENAME() to minimize the risk of SQL injection.

Setting up Account table and sample data

SET ANSI_NULLS ON GO SET QUOTED_IDENTIFIER ON GO SET ANSI_PADDING ON GO IF OBJECT_ID('Account') IS NOT NULL BEGIN DROP TABLE Account END GO CREATE TABLE [dbo].[Account]( [Id] [int] IDENTITY(1,1) NOT NULL, [AccountCode] [varchar](50) NULL, [AccountName] [nvarchar](200) NULL, CONSTRAINT [PK_Account] PRIMARY KEY CLUSTERED ( [Id] ASC ) ) GO SET ANSI_PADDING OFF GO SET IDENTITY_INSERT [dbo].[Account] ON INSERT [dbo].[Account] ([Id], [AccountCode], [AccountName]) VALUES (1, N'XOD814', N'Test account1') INSERT [dbo].[Account] ([Id], [AccountCode], [AccountName]) VALUES (2, N'GUR78437', N'Test account2') INSERT [dbo].[Account] ([Id], [AccountCode], [AccountName]) VALUES (3, N'TIY5', N'Test account3') INSERT [dbo].[Account] ([Id], [AccountCode], [AccountName]) VALUES (4, N'KOS370', N'Test account4') SET IDENTITY_INSERT [dbo].[Account] OFF

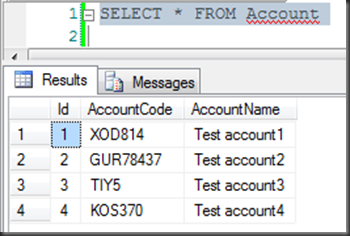

After setup the Accounts looks like below :-

Input data – ‘XOD814,GUR78437,ABCD’); DROP TABLE Account; –’

There are three items in the list which we want to look for in our table using the IN clause :-

- XOD814

- GUR78437

- ABCD’); DROP TABLE Account; –

As you can imagine, It’s the third item which might cause the table to be dropped. Let us see how :-

DECLARE @Query NVARCHAR(max)

SET @Query = ‘SELECT * FROM Account WHERE AccountCode IN (”XOD814”,”GUR78437”,”ABCD”); DROP TABLE Account; –”)’

SELECT @Query AS ‘Executed query’

EXEC (@Query )

IF OBJECT_ID (‘Account’) IS NOT NULL

BEGIN

SELECT ‘Account table exists’

END

ELSE

BEGIN

SELECT ‘Account table got dropped.’

END

GO

If you zoom in to the highlighted statement above :-

A – The actual value ‘ABCD’ is terminated by a single quote (which is escaped here in order to make it a proper SQL statement) and then with a ending bracket and a semicolon making the end of the select statement. The subsequent drop statement become now a qualified correct statement to be executed.

B – The DROP TABLE statement is now terminated by a semicolon followed by double dashes commenting out any possible subsequent statements which might get added by dynamic SQL formation.

Executing the above Sql script would get the table stopped because of the below resulting query

Resulting SQL query

SELECT * FROM Account WHERE AccountCode IN (‘XOD814′,’GUR78437’,’ABCD‘); DROP TABLE Account; –‘)

NOTE: '); closes the original statement and then a second statement (drop table) follows.

QUOTENAME() Function

QUOTENAME() function on a value delimits the value with a character (for example – a single quote) which can also be specified in the function. So, even if the value contains some other characters like quotes or semicolons to inject harmful SQL statements, the value is delimited and those harmful SQL statements will now become part of the string to be searched in the table rather than valid SQL statements to be executed and causing harm!

Below is the same query with all the values enclosed in QUOTENAME() with a single quote as delimiter. So, the client application can split the list of values and enclose each value in QUOTENAME() function before sending the dynamic SQL to SQL server.

DECLARE @Query NVARCHAR(max)

SET @Query = ‘SELECT * FROM Account WHERE AccountCode IN (‘+QUOTENAME(‘XOD814′,””)+’,’+QUOTENAME(‘GUR78437′,””)+’,’+QUOTENAME(‘ABCD”); DROP TABLE Account; –‘,””)+’)’

SELECT @Query AS ‘Executed query’

EXEC(@Query)

IF OBJECT_ID (‘Account’) IS NOT NULL

BEGIN

SELECT ‘Account table exists’

END

ELSE

BEGIN

SELECT ‘Account table got dropped.’

END

GO

Resulting SQL Query

SELECT * FROM Account WHERE AccountCode IN (‘XOD814′,’GUR78437’,’ABCD”); DROP TABLE Account; –‘)

NOTE – ”); can not close the original statement because the single quote is escaped.

Difference when using QUOTENAME()

So – what is the difference? Its just a single quote – highlighted in red below. QUOTENAME() delimits the whole ‘ABCD‘); DROP TABLE Account; –’ with single quotes and in that process escapes the single quote right after ABCD which would have otherwise terminated the string to ABCD which would be then terminated by ); followed by execution of DROP TABLE.

Executed Query without QUOTENAME()

SELECT * FROM Account WHERE AccountCode IN (‘XOD814′,’GUR78437’,’ABCD‘); DROP TABLE Account; –‘)

NOTE: '); closes the original statement and then a second statement (drop table) follows.

Executed Query with QUOTENAME()

SELECT * FROM Account WHERE AccountCode IN (‘XOD814′,’GUR78437’,’ABCD”); DROP TABLE Account; –‘)

NOTE – ”); can not close the original statement because the single quote is escaped.